Models are not reality

Insights on mathematical models from a recent book

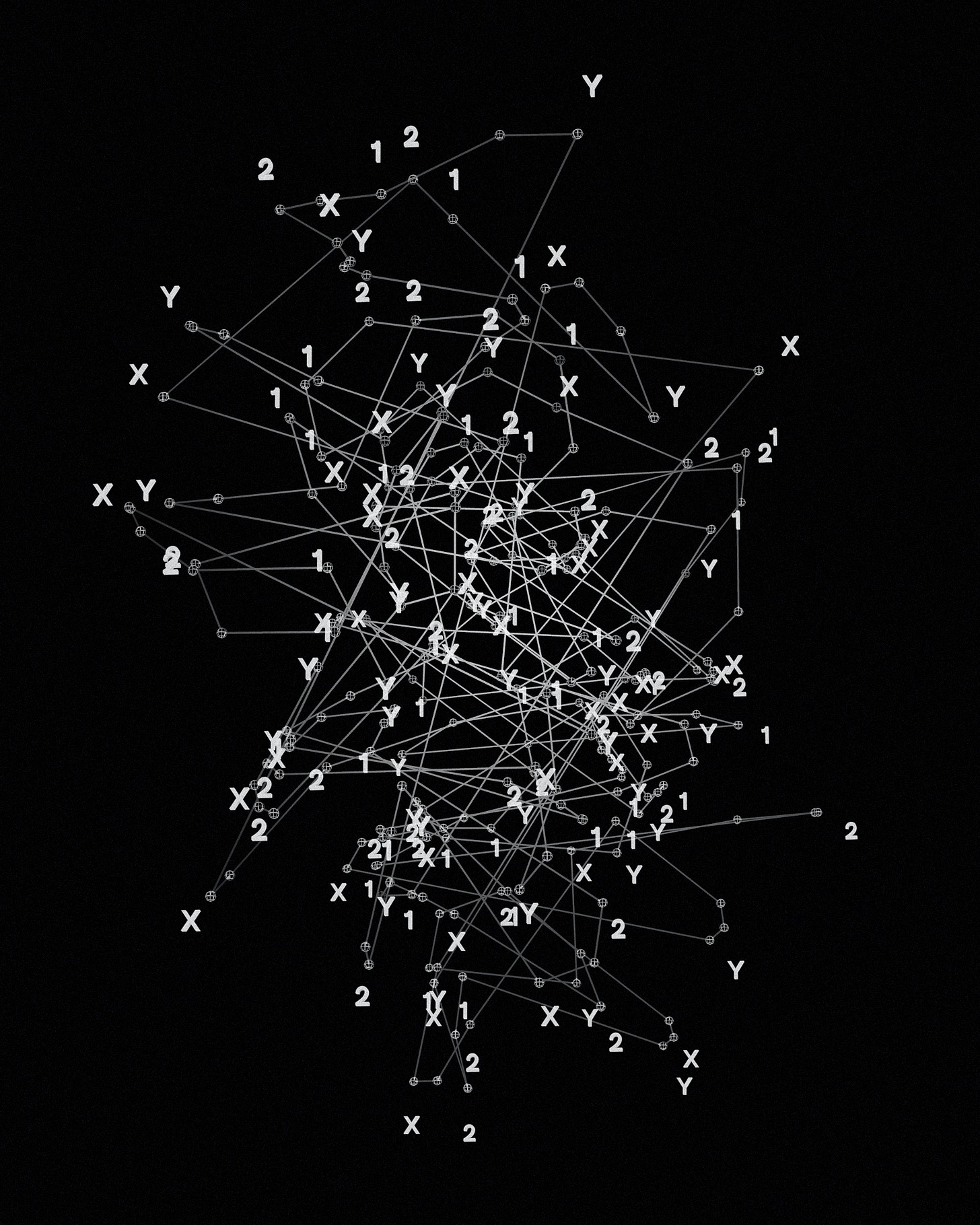

As our knowledge of the world is built from our theories, pictures and models of reality, we often end up mistaking our theories for reality. In her recent book, Escape from Model Land, Erica Thompson explores one particular case in significant detail: mathematical models. These models are increasingly prevalent in our modern world, whether in finance, epidemiology, climate research or online algorithms. As Thompson argues, we often wander into Model Land, where "all our assumptions are true" and "uncertainties are quantifiable",[1] but then forget that this isn't the real world and don’t know how to escape. Her book explores many themes that have surfaced here at Humble Knowledge and, despite being about mathematical modelling, is not technical and is an easy read.

Thompson's starting point is that any mathematical model is, by definition, a simplification of the world it is trying to represent.

If we are going to fit our complex world into a box in order to be able to model it and think about it, we must first simplify it.[2]

The obvious conclusion is one covered here previously: models can never capture everything about the world, and so their application is always limited. However, the book fleshes out a number of insights that add to various posts here. I recommend reading the book, but the following are some of its most interesting insights.

Limits and metaphors

It might seem trivial to note that the process of building a mathematical model always involves someone making decisions about how to simplify reality. However, whether these decisions are made due to the availability of data, computability, the current state of theory, or for any other reasons, the mathematical model always reflects the decisions of the modeller(s) who built it. This means that it builds in their assumptions, theories and biases.

We often like to think of a mathematical model as a neutral, mathematically objective analysis of the world. However, as Thompson emphasises, the fact that models instantiate the decisions and biases of the modellers means that they are not neutral, objective representations of the world, no matter how rigorous or sophisticated the calculations involved are. One consequence is that the outcomes of modelling studies often just express the assumptions that went into the model design, rather than being independent discoveries.

To pick one example used in the book,[3] the findings of various modelling studies in 2020 and 2021 that forms of social distancing would reduce the death toll from covid were not surprising, independent results. The relevant models were built on the assumption that reducing contact between people, i.e. social distancing, would reduce virus transmission. These studies were therefore always going to conclude that social distancing would reduce the death toll, even though the real-world evidence about the impact of distancing is mixed.
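To make the point concrete, here is a deliberately simple, hypothetical SIR-style epidemic model (my own toy sketch, not one of the models Thompson discusses). The function name `sir_deaths` and every parameter value (contacts per day, transmission probability, recovery rate, infection fatality ratio, population) are illustrative assumptions. The key line is the one that sets the transmission rate proportional to daily contacts: once that assumption is written in, the model has no way to conclude anything other than that fewer contacts mean fewer deaths.

```python
def sir_deaths(contacts_per_day: float,
               p_transmit: float = 0.03,     # hypothetical chance a contact transmits
               recovery_rate: float = 0.1,   # hypothetical: ~10 days infectious on average
               ifr: float = 0.005,           # hypothetical infection fatality ratio
               population: int = 1_000_000,
               initial_infected: int = 100,
               days: int = 730) -> float:
    """Toy discrete-time SIR model; returns expected deaths after `days`."""
    # The baked-in assumption: transmission rate is proportional to contacts.
    beta = contacts_per_day * p_transmit
    s = float(population - initial_infected)  # susceptible
    i = float(initial_infected)               # currently infected
    for _ in range(days):
        new_infections = beta * s * i / population
        new_recoveries = recovery_rate * i
        s -= new_infections
        i += new_infections - new_recoveries
    # Everyone who has left the susceptible pool has been infected at some point.
    ever_infected = population - s
    return ifr * ever_infected


if __name__ == "__main__":
    for contacts in (10, 5, 2):
        print(f"{contacts:>2} contacts/day -> {sir_deaths(contacts):,.0f} deaths")
```

In this model, lowering `contacts_per_day` lowers the death toll because that relationship is an input, not a finding. Whether real-world distancing actually cuts transmission as much as the model assumes is a question the model itself cannot answer.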

Thompson also draws on epidemiological modelling of covid to illustrate another common dynamic of modelling. Because a mathematical model is always someone's simplification of the world, it always focuses on some aspects of reality at the expense of others. Common epidemiological models, for example, focused on the transmission and rates of covid infections and ignored other health factors, such as mental health or the impacts on other diseases.

This is unavoidable when we build models and leads Thompson to make a provocative suggestion: mathematical models are really just elaborate metaphors. As she points out:

the act of modelling is [largely] an assertion that A (a thing we are interested in) is like B (something else of which we already have some kind of understanding).[4]

This is the same logical structure as a metaphor. In the case of modelling, B may be a mathematical construct but, as with a metaphor, it explains some aspect of reality by capturing some important features but leaving many others out. In other words, both metaphors and mathematical models are limited and break down when we try to push them too far.

For mathematical models, Thompson emphasises that we build them with a specific purpose in mind: they are always built to model particular types of phenomena and answer particular questions. For example, epidemiological models of covid focused on the spread of one disease over a period of time, but couldn't help us understand, among many other things, how the same disease affected health and daily life more broadly.

Alongside these two structural limitations (models instantiate the views of the modellers, and they are limited in their applicability in the same way metaphors are), there is a relevant technical issue. Given there are gaps between models and the real world, it is worth noting where these gaps, or errors, arise. There are two types of error to take note of.[5]

The first is that the data we put into models is often inexact or imprecise. This might seem to be a small issue, except that many important models follow the real world in being mathematically chaotic. This means that, by what is known as the Butterfly Effect, very small differences in inputs can lead, over time, to huge differences in results. The good news is that we can account for and quantify these uncertainties reasonably well, usually by running our models many times across plausible ranges of input data that capture the likely real-world variation. This is how ensemble weather forecasts work, for example.
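A minimal sketch of the Butterfly Effect, and of the ensemble approach to it, using the logistic map `x_next = r * x * (1 - x)`, a standard textbook example of a chaotic system. This is my choice of illustration rather than an example from the book, and the starting value, perturbation sizes and number of ensemble members are arbitrary assumptions.

```python
import random
import statistics


def logistic_step(x: float, r: float = 4.0) -> float:
    """One step of the logistic map; r = 4 puts it in the chaotic regime."""
    return r * x * (1.0 - x)


def trajectory(x0: float, steps: int, r: float = 4.0) -> list[float]:
    """Iterate the map from x0, returning every state including the start."""
    xs = [x0]
    for _ in range(steps):
        xs.append(logistic_step(xs[-1], r))
    return xs


def ensemble_spread(x0: float, noise: float, members: int, steps: int,
                    seed: int = 0) -> float:
    """Run many copies from slightly perturbed starts and return the spread
    (standard deviation) of their states after `steps` iterations."""
    rng = random.Random(seed)
    finals = []
    for _ in range(members):
        start = x0 + rng.uniform(-noise, noise)  # an imprecise "measurement"
        finals.append(trajectory(start, steps)[-1])
    return statistics.stdev(finals)


if __name__ == "__main__":
    a = trajectory(0.2, 50)
    b = trajectory(0.2 + 1e-10, 50)  # same model, input off by 1e-10
    for t in (0, 10, 20, 30, 40, 50):
        print(f"step {t:>2}: difference = {abs(a[t] - b[t]):.2e}")
    for lead in (5, 20, 60):
        print(f"lead {lead:>2}: ensemble spread = "
              f"{ensemble_spread(0.2, 1e-6, 200, lead):.2e}")
```

Two runs that start 10^-10 apart become unrelated within a few dozen steps. The ensemble spread, by contrast, gives a usable measure of that uncertainty: it stays tiny at short lead times and widens as the lead time grows, which is, in spirit, what forecasters see when ensemble members fan out.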

There is, however, another, more fundamental source of uncertainty, which Thompson refers to as the Hawkmoth effect. Just as small errors in the data can lead to large differences in outcomes, small errors in the model's equations can also lead to large differences in outcomes. Unlike errors in the data, however, these errors aren’t quantifiable: we don’t know where in the equations they might lie, and it is practically infeasible to construct and run every plausible alternative version of the model. This is not a reason to discard models, but rather to be more sensitive to the gaps between models and reality.
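The Hawkmoth effect can be sketched the same way, assuming a hypothetical "true" system and a slightly wrong model of it. Below, the model is the logistic map with r = 3.9, and the "reality" it approximates adds a small extra term, `0.005 * sin(2πx)`, that the modeller left out. The form and size of that missing term are arbitrary choices of mine for illustration, not an example quoted from the book. The two rules never disagree by more than 0.005 at any single step, and both start from exactly the same, perfectly known, initial condition.

```python
import math
from collections.abc import Callable


def model(x: float) -> float:
    """The modeller's equation: a logistic map in its chaotic regime."""
    return 3.9 * x * (1.0 - x)


def reality(x: float) -> float:
    """Hypothetical 'true' dynamics: the same map plus a tiny term the
    model omits. The two differ by at most 0.005 for any x."""
    return 3.9 * x * (1.0 - x) + 0.005 * math.sin(2.0 * math.pi * x)


def run(step: Callable[[float], float], x0: float, steps: int) -> list[float]:
    """Iterate a one-dimensional map from x0, returning every state."""
    xs = [x0]
    for _ in range(steps):
        xs.append(step(xs[-1]))
    return xs


if __name__ == "__main__":
    # Largest one-step disagreement between the two rules on a fine grid.
    grid = [k / 10_000 for k in range(10_001)]
    worst = max(abs(model(x) - reality(x)) for x in grid)
    print(f"max one-step difference: {worst:.4f}")

    # Identical, exactly known starting point: no input error at all.
    m = run(model, 0.3, 60)
    r = run(reality, 0.3, 60)
    for t in (0, 1, 5, 10, 20, 40, 60):
        print(f"step {t:>2}: model={m[t]:.3f} reality={r[t]:.3f}")
```

Running the model from thousands of perturbed starting points would not reveal this gap, because the error sits in the equation rather than the inputs. Quantifying it would require knowing which alternative equations to try, which is exactly what we usually lack.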

Getting lost

One big danger of entering Model Land, on Thompson's account, is that we forget that models are metaphors and rely on them too much. Instead, we should be thinking seriously about the relationship between the models (simplified metaphors that help explain the world) and what is happening in the real world. Thompson identifies a few ways we forget we are living in Model Land.

One challenge is that people with technical expertise who work closely on models often find that the structure and logic of the models influence their understanding of the real world. They become so comfortable working with the models that the world comes to look like the models to them. Related to this, the outcomes of modelling studies are often reported as facts about the real world, without acknowledging the uncertainties inherent in models.[6] Covid headlines, nutritional studies and some areas of climate research are good examples where model results are reported as though they describe reality.

One example that comes up in the book is that many medical studies done in mice are really modelling studies. For medical purposes, mice are related, similar organisms that allow us to draw some conclusions about human health, but they are simpler in many ways. In other words, mice are organic models of humans. Yet, again, results from mouse studies are often extrapolated directly to humans without clearly stating the gaps.

Mathematical models also don't exist independently of the worlds they are trying to describe, and often help create or shape reality. For example, through the 1970s and 1980s, financial investors believed that the Black-Scholes equation defined the fair market price of options.[7] Investors therefore bought options that the model said were undervalued and sold those it said were overvalued. As a result, Black-Scholes came to describe market prices, because all the players involved acted as if it did.

I would add a related example from the covid pandemic. The early modelling that captured public attention was built around covid cases and deaths. These metrics, and the graphs the models produced, came to frame how we thought about and tracked the disease. As a result, newspapers and governments worldwide published real-world data in the same form as the earlier model outputs. The models shaped our view of reality and drew attention away from a range of other important factors.

Escaping from Model Land

When we get stuck in Model Land, we can be blind to many things that matter. So we need to think clearly about when and how to enter Model Land - which houses many powerful and useful tools for understanding and predicting reality - and when and how to leave it. Thompson leans heavily on the concept of expert judgement as the way to take insights from models and apply them in the real world.

This may describe how we humans work in practice, and, as Thompson argues, we may even be good at it. But it doesn’t engage with many serious concerns about human judgement. We often turn to mathematical modelling to remove human biases from our analysis, yet Thompson argues that we need to, in effect, reintroduce those potential biases.

What this concern misses, and Thompson doesn’t note, is that human judgement can bridge the gap between Model Land and the real world because we humans have a lifetime of lived experience in the real world. Models, like AI, have no direct experience of or connection to reality, and so cannot tell the difference between profound and nonsensical results. Our lived and trained experience, on the other hand, enables us to distinguish between the two, albeit not with perfect reliability.

Given that we need to rely on human judgement, Thompson offers some principles that, I think, apply more broadly than to mathematical modelling alone. All of them rest on the idea that models are really metaphors or, to phrase it differently, partial representations that capture some aspect of reality. She gives us five:[8]

1. Define the purpose. Models, like metaphors, are built and used to illustrate reality in a particular context and for a particular purpose. We therefore need to define the purpose clearly and keep it in mind when we take model results and apply them.

2. Don't say 'I don't know'. Metaphors don't have to be right to give us useful insights about the world. Likewise, the fact that a model isn't right doesn't mean it doesn't tell us anything useful.

3. Make value judgements. There is no neutral, objective perspective, so make your value judgements explicit rather than pretending you aren't making any.

4. Write about the real world. A model, in and of itself, is not helpful unless we can describe its expected relationship to the real world, including likely sources of uncertainty.

5. Use many models. Relying on one model, or one family of models, blinds us to its baked-in assumptions. Use as many different, good models as you can to give different perspectives.

All of these principles embody humility about our knowledge and, if we substitute 'theory' or 'hypothesis' for 'model', apply to a range of fields, not just mathematical modelling. I may have quibbles about some of these, but I am keen to get readers’ reactions in the comments. This will be a topic for a future follow-up to my previous post on practical epistemic humility.

[1] Thompson, E., Escape from Model Land, Basic Books, 2022, p. 3

[2] Escape from Model Land, p. 13

[3] Escape from Model Land, pp. 187-189

[4] Escape from Model Land, p. 31

[5] Escape from Model Land, pp. 140-146

[6] Escape from Model Land, pp. 24-25

[7] Escape from Model Land, pp. 116-119

[8] Escape from Model Land, pp. 219-222
