AI sees patterns. Humans see things.

Another fundamental difference between how humans and machines operate.

At the start of this year, an amusing story about AI image detection went viral. In a development test, researchers spent a week training an AI detection system on the movement patterns of US Marines. They then set up the system in the middle of a traffic circle and challenged the Marines to approach it undetected. Every Marine involved succeeded by adopting what we might call childish tricks - somersaulting instead of walking, hiding in a big cardboard box or dressing up as a tree. Once again, while digital and AI technologies are vastly superior to humans in some sophisticated problem-solving situations (like chess or Go), they struggle to do many things that toddlers manage easily.

It is often thought that this is a data processing issue, or that we don't have sufficiently sophisticated algorithms. Instead, it shows how humans and computers process information very differently - and in a different order.

How machines process information

It is fairly well known that machine translation services rely, fundamentally, on statistical techniques. Even when based on sophisticated neural networks, they translate based on statistical predictions of the most likely combination of words that correspond to the input.[1] Humans, on the other hand, think and translate depending on the meanings of words and sentences. Machine translation is built on data (and the more the better) while humans rely on understanding.
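To make "statistical prediction" concrete, here is a deliberately tiny sketch in Python. It is not how any real translation service is built: the corpus, the phrases and the function names are all made up for illustration, and modern neural systems are vastly more sophisticated. The principle it shows is the same one described above, though: pick the output that was most often paired with the input in the data, with no notion of meaning.

```python
from collections import Counter, defaultdict

# Hypothetical toy "parallel corpus": (French phrase, English phrase) pairs.
PAIRS = [
    ("chat noir", "black cat"),
    ("chat noir", "black cat"),
    ("chat noir", "dark cat"),
    ("bonjour", "hello"),
    ("bonjour", "good morning"),
    ("bonjour", "hello"),
]


def build_phrase_table(pairs):
    """Count how often each source phrase was paired with each target phrase."""
    table = defaultdict(Counter)
    for source, target in pairs:
        table[source][target] += 1
    return table


def translate(table, source):
    """Return the most frequently seen translation, or None if the phrase is unseen."""
    candidates = table.get(source)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


table = build_phrase_table(PAIRS)
print(translate(table, "chat noir"))  # the most common pairing in the data
print(translate(table, "chien"))      # never seen in the data, so no answer
```

Note what happens with a phrase that never appeared in the data: the sketch has nothing to say about it at all.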

There is a similar dynamic at play with image detection, or ‘seeing’.

AI image or object detection systems are built on top of lots of data. For a particular object X, they are fed lots of examples of Xs (“training data”) and from that they learn to identify anything that looks like, or fits the pattern of, an X. The magic of modern AI systems is that they figure out the pattern for themselves, and humans are typically unable to understand how they do it. To see an X, an AI system looks for any pattern in its input that matches what it has been trained to identify as an X.
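For readers who like to see the idea in code, here is a minimal sketch of "learning a pattern from examples". It is a toy under loud assumptions: each example is reduced to two made-up numbers (height in metres and movement speed in metres per second), and the "pattern" is just the average of the examples plus a tolerance. Real detection systems learn far richer patterns from raw pixels, but the logic of "does this input fit what I was trained on?" is similar in spirit.

```python
import math


def learn_pattern(examples):
    """Average the training examples into one learnt 'pattern' (a centroid)."""
    n = len(examples)
    dims = len(examples[0])
    centroid = [sum(e[i] for e in examples) / n for i in range(dims)]
    # The furthest training example from the centroid sets the tolerance.
    radius = max(math.dist(e, centroid) for e in examples)
    return centroid, radius


def looks_like_x(pattern, features):
    """True if the input falls within the tolerance of the learnt pattern."""
    centroid, radius = pattern
    return math.dist(features, centroid) <= radius


# Hypothetical training data: (height in m, speed in m/s) of people walking.
walking_marines = [(1.8, 1.4), (1.7, 1.5), (1.9, 1.3), (1.75, 1.6)]
pattern = learn_pattern(walking_marines)

print(looks_like_x(pattern, (1.8, 1.45)))  # someone walking normally
print(looks_like_x(pattern, (0.9, 0.8)))   # someone somersaulting, low and slow
```

The second input is still a Marine, but it sits nowhere near the examples the pattern was built from.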

Humans process what they see in a different order. This is easiest to notice in edge cases, such as when the lighting is dim or a lot is going on. We have all had the experience of seeing something without knowing what it was. Maybe it was an animal, but we didn't know what sort. A natural response is to stop and think about what it could have been.

The important point here is that we first saw some thing, then we tried to identify what it was. This, I would argue, is normally how humans see. We identify what we see as objects or things first, and then categorise them. To put it differently, we see some thing first, and then figure out if it is an X or a Y. AI systems operate in the reverse order - they use patterns to identify that there is an X or a Y, and only then do they know they have seen some thing.

We can see this in the story above. The AI system was looking for Marines but, because nothing it saw fitted the pattern of a Marine in its database, it didn't see any. Humans, even toddlers, would look at a tree or a cardboard box in an odd place - they would see the thing - and then try to decide what it could be in the context. Given the situation, the obvious conclusion would be that it was a Marine trying to hide.

To put it differently, it wasn’t that the AI system saw the cardboard box or the tree and decided it wasn’t a Marine. Instead, nothing in its inputs matched the pattern of a Marine that it had learnt and so it didn't see anything.
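The difference in order can be sketched in a few lines of Python. Everything here is an illustrative assumption rather than a description of the system in the story: the scene is a hand-written list of regions, each described by a few made-up traits, and "matching" is simple trait overlap. The first function only ever reports regions that match a known pattern. The second registers every region as a thing first and only then tries to categorise it.

```python
# Hypothetical learnt pattern: the traits the system associates with a Marine.
KNOWN_PATTERNS = {"marine": {"upright", "walking", "human-shaped"}}

# Hypothetical scene: three regions of the input, described by made-up traits.
SCENE = [
    {"id": 1, "traits": {"upright", "walking", "human-shaped"}},  # walking normally
    {"id": 2, "traits": {"boxy", "cardboard", "shuffling"}},      # under a cardboard box
    {"id": 3, "traits": {"leafy", "upright", "shuffling"}},       # dressed as a tree
]


def match_score(traits, pattern):
    """Fraction of the pattern's traits that are present in the region."""
    return len(traits & pattern) / len(pattern)


def pattern_first(scene, patterns, threshold=0.8):
    """Report only regions that match a known pattern; the rest are never reported."""
    detections = []
    for region in scene:
        for label, pattern in patterns.items():
            if match_score(region["traits"], pattern) >= threshold:
                detections.append((region["id"], label))
    return detections


def object_first(scene, patterns, threshold=0.8):
    """Register every region as a thing first, then try to categorise it."""
    things = []
    for region in scene:
        label = "unknown"
        for name, pattern in patterns.items():
            if match_score(region["traits"], pattern) >= threshold:
                label = name
        things.append((region["id"], label))
    return things


print(pattern_first(SCENE, KNOWN_PATTERNS))
print(object_first(SCENE, KNOWN_PATTERNS))
```

In the pattern-first output, the box and the tree simply do not appear. In the object-first output they are present as "unknown" things, which is exactly the prompt a human would use to stop and ask what they might be.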

This is a structural information processing difference that suggests some limits to the usefulness of AI. Most simply, AI systems will likely always struggle when they are faced with something new that doesn't already exist in their training data. They often literally don't see objects they don't recognise - which is a problem, for example, in the development of safe automated vehicles. We can expect driverless cars to be safe in very common situations, but accidents normally happen when there is something unexpected. Work is going on to address this by developing artificial driving data so the automated systems have enough training data for any foreseeable situation. The question is, however, whether we can foresee all the situations we care about.

Can we teach computers to see like we do?

I expect we will never be able to 'fix' this situation by teaching computers or AI to see like humans do, for a few reasons. One is that we carry around a model or map of the world in our heads. It often isn't in high resolution but we have default expectations about what we are going to see and where. We often notice things that are out of place much more clearly than we see what we expect to be there - which biases us to deal better with new situations that might be dangerous. A further aspect to this is that our model of the world is connected to our experiences of living in three-dimensional space. We have explored and manipulated things in the real world and this feeds back into what and how we see.

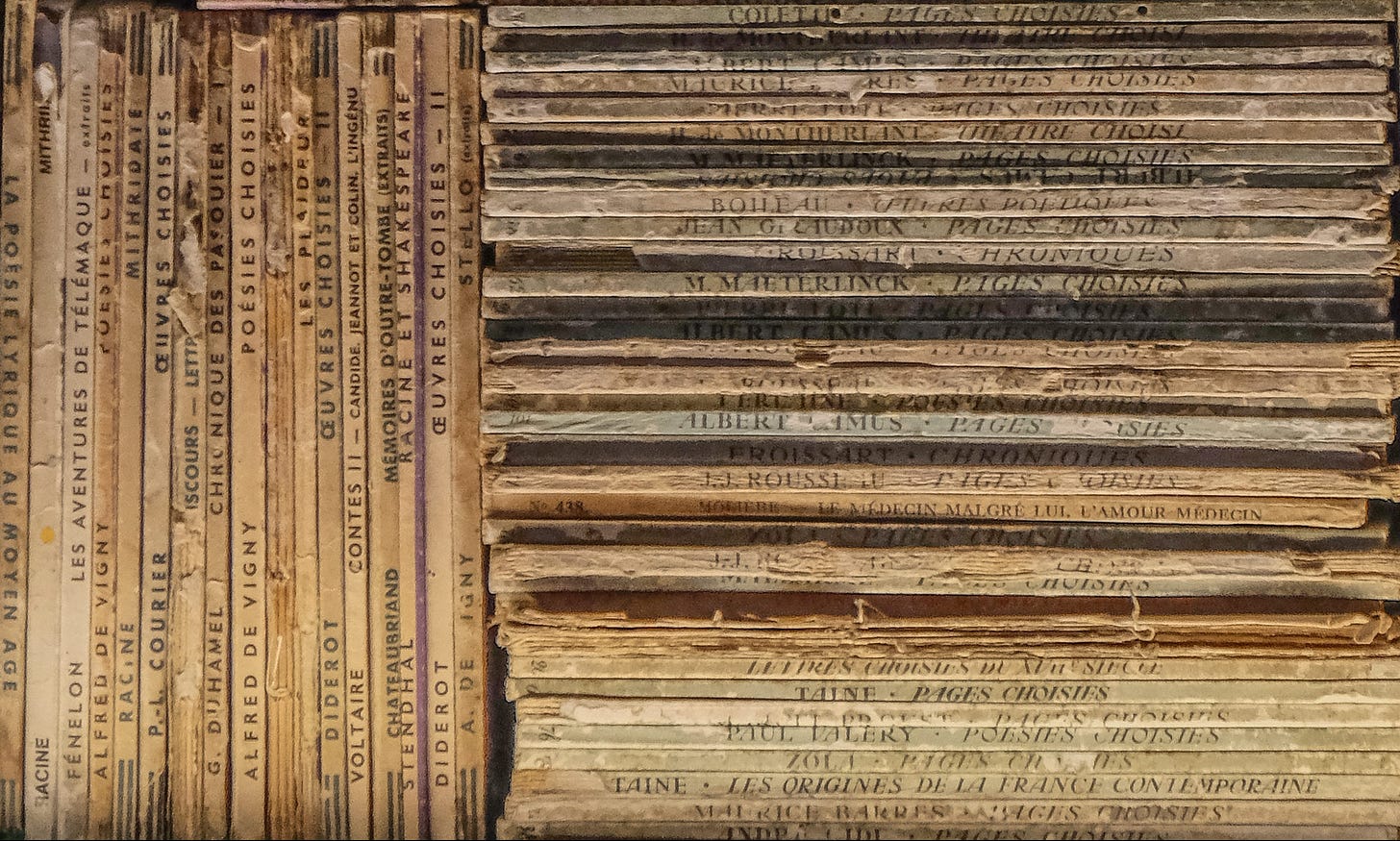

A second factor is we seem to have some kind of innate skill in our ability to see things as particular objects. As humans, we are very capable of differentiating the world into different, internally connected, things or objects. Take for example this picture:

I'm not going to test you on this, but how many different objects are there in the picture? That might be tedious but it isn't a hard question for a human. It is a far harder question for an AI system. If you reflect on how you know, I expect you’d identify something instinctive - I can just see the different books. But we also have a second system at play to help - our contextual knowledge of what books or journals are like in the real world. Some of it might be physical (how books decay over time or how shadows work), and some might be cultural (titles are written on the spine in a single line). None of these are easy to teach to an AI system, and some may not be possible to teach.

In other words, AI systems structurally operate in a different way to humans: AI systems look for patterns and use them to identify objects, whereas humans see objects and then categorise those objects. This difference, plus previous ones I have written about, means that AI will never be able to replace what humans do in the way we do it. There will be many things AI can do better than humans, but there will also remain other things that humans can do and AI can't.

[1] At their core, generative AI programs like ChatGPT, and the Large Language Models they rely on, use the same type of approach.
